According to Gartner, close to 50% of API security incidents in 2022 were directly attributed to misconfigured or inadequate authentication. This alarming figure highlights an essential truth: the security of your applications and APIs is fundamentally dependent on the effectiveness of the authentication methods and data encryption techniques you employ.

1. Enhance Authentication Through Multifactor Authentication (MFA) And Role-Based Access Control (RBAC)

i. The Rationale for MFA

In conventional single-factor authentication, users input a solitary set of credentials (commonly a password), which inherently carries considerable risks. A breached password acts as the sole barrier between an attacker and your application’s sensitive data. Multifactor Authentication (MFA) addresses this vulnerability by necessitating additional validation procedures, such as a one-time passcode dispatched to a registered mobile device or biometric checks. This supplementary security layer significantly diminishes the likelihood of unauthorized access, especially in light of the increasing prevalence of phishing and brute-force attacks.

Recent statistics indicate that organizations that have adopted MFA have reduced account takeover incidents by as much as 99.9%. Implementing MFA is straightforward, with widely used solutions such as Authy and Google Authenticator, along with enterprise-grade options like Okta or Azure AD. By incorporating an additional authentication factor, organizations substantially lower the threat of unauthorized access and strengthen their overall security posture.
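To make the one-time passcode idea concrete, here is a minimal sketch of time-based one-time passwords (TOTP, RFC 6238), the scheme used by authenticator apps. It relies only on the Python standard library; the function names and the drift window are illustrative assumptions, and a production system would normally use a maintained MFA library or provider rather than hand-rolled code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset from the last nibble
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept the current code and codes `window` steps either side (clock drift)."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + drift * step, step), submitted)
        for drift in range(-window, window + 1)
    )
```

The server stores one shared secret per user and calls `verify_totp` only after the password check has succeeded, so a stolen password alone is not enough to sign in.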

ii. RBAC: A Vital Layer of Fine-Tuned Control

Role-Based Access Control (RBAC) represents another indispensable element for safeguarding applications. Under RBAC, access rights are allocated based on an individual’s role within the enterprise, ensuring that each user possesses only the permissions essential to fulfill their designated responsibilities. This strategy constrains access to sensitive information and mitigates potential harm in the event of an account compromise.

The application of RBAC entails meticulous alignment of organizational roles and delineation of detailed permissions. For example, a developer may require access to code repositories but not to financial data, while a system administrator might need visibility into network settings yet not into customer information. By refining access rights according to roles, RBAC significantly curtails the risk of excessive permissions—one of the key contributors to insider threats and unauthorized data exposure.
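A role-to-permission mapping like the one described above can be sketched in a few lines. The role names and permission strings below are hypothetical, chosen only to mirror the developer and system administrator examples; a real deployment would load them from its identity provider or policy store.

```python
# Hypothetical roles and permission strings, for illustration only.
ROLE_PERMISSIONS = {
    "developer": frozenset({"repo:read", "repo:write"}),
    "sysadmin": frozenset({"network:read", "network:write"}),
    "finance": frozenset({"ledger:read"}),
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles and unlisted permissions are refused."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())
```

Because the check denies by default, adding a new role grants nothing until permissions are listed for it explicitly.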

2. Secure Data Through Encryption Both In Transit And At Rest

i. Importance of HTTPS and TLS in Data Transmission

When data traverses the internet without encryption, it becomes vulnerable to interception, potentially revealing sensitive information. This risk has established secure data transmission as a necessity for applications and APIs. Transport Layer Security (TLS), especially its most recent iterations (TLS 1.2 and later), safeguards data in transit, making intercepted information indecipherable to individuals lacking encryption keys. Mandating HTTPS for all endpoints is a crucial measure; however, to ensure application security, verify that every API request and connection adheres to HTTPS, including redirection from HTTP to HTTPS to prevent security loopholes.
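As a rough illustration of enforcing these rules in code, the sketch below builds a client-side TLS context that refuses anything older than TLS 1.2 and computes the HTTPS target for an HTTP-to-HTTPS redirect. It uses Python's standard `ssl` and `urllib.parse` modules; the function names are assumptions made for this example.

```python
import ssl
from urllib.parse import urlsplit


def build_client_context() -> ssl.SSLContext:
    """TLS context that verifies certificates and refuses protocols below TLS 1.2."""
    ctx = ssl.create_default_context()  # certificate and hostname checks are on by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def https_redirect_target(url: str) -> str:
    """Return the same URL with the scheme forced to https (explicit ports are kept as-is)."""
    return urlsplit(url)._replace(scheme="https").geturl()
```

The context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=build_client_context())`, so that every outbound API call is held to the same minimum.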

Salt Labs has discovered that more than 40% of operational APIs lack adequate encryption, resulting in plaintext data exposure and subsequent security breaches. This oversight is critical, as HTTPS represents a straightforward yet highly effective security measure. A TLS-secured HTTPS connection uses asymmetric cryptography to authenticate the server and agree on session keys, then symmetric encryption to protect the data exchanged between client and server, safeguarding sensitive materials such as authentication tokens, personal information, and transaction details.

ii. Encrypting Data at Rest: A Proactive Strategy for Secured Information

While it is vital to secure data during transmission, encrypting data at rest is equally important. Information stored on servers, databases, or cloud environments may be susceptible to threats, especially in multi-tenant settings. The process of data at rest encryption transforms information into ciphertext, which can only be accessed with the appropriate decryption key; this key should ideally be safeguarded within a hardware security module (HSM) or a comparable secure repository.

Encryption keys must be safeguarded through regular rotation and stringent access controls to avert exposure from unintentional mishandling or malicious intrusion. Database encryption can be configured either at the file-system level or inside the database itself, depending on how sensitive the information is and on the performance impact. AES-256 (the Advanced Encryption Standard with a 256-bit key) is a robust and widely adopted encryption algorithm for data at rest, delivering a significant level of protection against possible breaches.

iii. Challenges and Essential Insights

- Mitigating Breach Expenses: The financial repercussions of data breaches are substantial. A recent analysis by IBM indicated that the average expense of a data breach in 2023 reached $4.45 million. These expenses encompass data recovery, reputational harm, and legal fees. Organizations can enhance security and lower potential expenses by utilizing multi-factor authentication (MFA), role-based access control (RBAC), HTTPS, and encryption for stored data.

- Enhancing User Confidence: Users are becoming more vigilant regarding the management of their data. Implementing robust security measures fosters trust within your user community and shows dedication to their privacy and security. For applications that handle sensitive user data, transparent security practices are imperative.

- Compliance and Regulatory Requirements: Numerous sectors, including healthcare and finance, face strict regulations concerning data management and protection. Following recognized best practices for ensuring authentication and encryption is essential for meeting compliance obligations like GDPR, HIPAA, and PCI-DSS, thus protecting your organization from fines and potential legal issues.

3. Limit Data Exposure and Utilize Parameterized Queries

i. Minimizing Data Exposure in API Responses

A crucial strategy for securing APIs is to reduce the volume of data revealed in responses. Excessive data exposure can hand attackers a wealth of sensitive information and turn the API into an attractive target for exploitation or abuse. The exposure is frequently unintentional: developers may craft API responses that contain more information than is necessary. For example, an API response concerning user data might include sensitive attributes such as addresses or internal identifiers, which should, in principle, be kept hidden from the client.

To mitigate this issue, it is vital to establish the minimal amount of data required in each API response, a principle referred to as data minimization. Executing this strategy necessitates close cooperation between API developers and stakeholders to ascertain the precise data requirements for every endpoint, thereby avoiding unnecessary data sharing and minimizing risk. The implementation of versioning and adherence to API management best practices can also prove invaluable, as it facilitates incremental modifications in API architecture without disrupting users.
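One simple way to apply data minimization is an explicit allowlist of fields per endpoint, so that new internal attributes never leak by default. The field names below are hypothetical and stand in for whatever the stakeholders agree each endpoint really needs.

```python
# Hypothetical allowlist for a public user-profile endpoint.
PUBLIC_USER_FIELDS = ("id", "display_name", "email")


def to_public_user(record: dict) -> dict:
    """Copy only allowlisted fields; everything else stays server-side."""
    return {field: record[field] for field in PUBLIC_USER_FIELDS if field in record}
```

An allowlist is preferable to a blocklist here: a column added to the database later is excluded from responses until someone deliberately exposes it.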

ii. Defensive Coding with Parameterized Queries

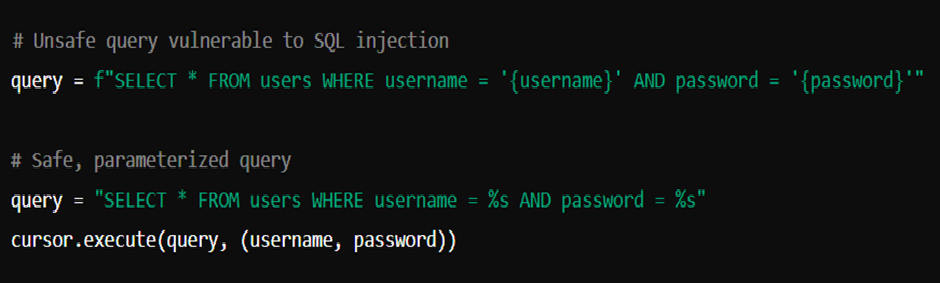

SQL injection continues to pose a significant risk to APIs, especially when user inputs are accepted without adequate sanitization. Attackers frequently exploit query vulnerabilities by injecting malicious SQL code, which can lead to unauthorized access or modification of sensitive information. In this context, parameterized queries provide a robust defense by distinguishing query logic from user input, effectively neutralizing the threat of injection attacks.

With parameterized queries, placeholders stand in for user inputs, and the database receives the input values separately from the query text, so they are always treated as data and never as part of the SQL structure. Unlike string concatenation, this prevents malicious input from being executed as SQL commands. Input validation is still worthwhile, but it complements parameterization rather than replacing it. Consider the following example:
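The contrast can be sketched with Python's built-in `sqlite3` module and an in-memory database. The table, function names, and payload are assumptions made for illustration; the first function shows the vulnerable concatenation pattern and the second shows the parameterized form.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # DO NOT USE: the input is concatenated into the SQL text, so it can change the query.
    query = "SELECT id, username FROM users WHERE username = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # The "?" placeholder sends the value separately; it is only ever treated as data.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()


# Demo data in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

# A classic injection payload: it closes the string literal and adds an always-true condition.
payload = "nobody' OR '1'='1"
```

With this payload, the concatenated query matches every row in the table, while the parameterized query simply looks for a user literally named `nobody' OR '1'='1` and finds none.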

In the second, more secure scenario, user-supplied values (like usernames and passwords) are kept separate from the SQL query text, which removes the main route for SQL injection. Widely used databases, including PostgreSQL, MySQL, and SQL Server, natively support parameterized queries. Applying this practice across all database interactions enhances the security of the API.

4. Implement Rate Limiting and Throttling to Control API Misuse

Understanding Rate Limiting and Throttling

Rate limiting and throttling are critical components in the API management toolkit, especially for safeguarding against abusive or malicious clients that seek to overload systems through rapid, repetitive requests. Without these measures, APIs are exposed to denial-of-service (DoS) attacks, which endanger service availability by flooding the system with excessive traffic.

In basic terms, rate limiting constrains the number of requests that an API can process from a single client within a specified timeframe, such as “100 requests per minute.” Rate limiting serves to deter API misuse, such as automated scripts trying to harvest data or a malicious user attempting to exhaust resources through incessant requests. Conversely, throttling moderates the influx of traffic, reducing the pace of excessive requests instead of outright denying them. Throttling can assist in alleviating DoS attacks while maintaining a satisfactory user experience for legitimate clients.
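A common way to implement a per-client limit is a token bucket: each request spends one token, and tokens refill at a steady rate up to a fixed capacity. The sketch below is a minimal, single-process version with an injectable clock for testing; the class and parameter names are assumptions, and a real deployment would usually keep the counters in a shared store or let the gateway handle this.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity`, refilling `refill_per_second` tokens per second."""

    def __init__(self, capacity: int, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock
        self._tokens = float(capacity)  # start with a full bucket
        self._updated = clock()

    def allow(self) -> bool:
        """Spend one token if available; return False when the client should be limited."""
        now = self._clock()
        elapsed = now - self._updated
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_per_second)
        self._updated = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False
```

A limit of "100 requests per minute" would correspond roughly to `TokenBucket(capacity=100, refill_per_second=100 / 60)`, with one bucket kept per client. When `allow()` returns False, the caller decides whether to reject the request (rate limiting) or delay it (throttling).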

i. Executing Rate Limiting with API Gateways

Most contemporary API gateways, such as Amazon API Gateway, Kong, and Apigee, provide integrated rate limiting and throttling functionalities. By establishing global constraints on API consumption, these gateways enable you to manage traffic volume and avert misuse. For example, Amazon API Gateway offers usage plans that impose limits on API requests based on IP address, user, or application. This feature is vital for scaling APIs while ensuring security.

To enhance rate limiting further, consider the adoption of adaptive rate limiting, which employs machine learning to adjust rate limits in real-time based on standard usage patterns and recent behaviors. For instance, if an API generally experiences a maximum of 1,000 requests per minute but suddenly encounters an unusual surge, the adaptive rate limiter can recognize this as potentially harmful activity and modify the rate limit accordingly. This sophisticated method of rate limiting aids in detecting and addressing emerging threats while minimizing the likelihood of false positives.
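Production adaptive limiters rely on trained models, but the underlying idea of comparing current traffic with a learned baseline can be shown with a much simpler statistical stand-in. The sketch below flags a per-minute request count as anomalous when it exceeds the recent mean by several standard deviations. This is a plain moving-baseline check, not machine learning, and the class name, history size, and thresholds are all assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class SurgeDetector:
    """Flag request counts far above a rolling baseline of recent normal traffic."""

    def __init__(self, history: int = 60, sigmas: float = 3.0, warmup: int = 10) -> None:
        self._counts = deque(maxlen=history)
        self._sigmas = sigmas
        self._warmup = warmup

    def observe(self, requests_this_minute: int) -> bool:
        """Return True if the count looks anomalous; normal counts update the baseline."""
        anomalous = False
        if len(self._counts) >= self._warmup:
            baseline = mean(self._counts)
            spread = max(pstdev(self._counts), 1.0)  # avoid a zero-width band
            anomalous = requests_this_minute > baseline + self._sigmas * spread
        if not anomalous:
            # Only normal traffic feeds the baseline, so an attack cannot raise it.
            self._counts.append(requests_this_minute)
        return anomalous
```

When `observe` returns True, the surrounding system could tighten the client's limit temporarily rather than blocking outright, which keeps false positives cheap.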

ii. Formulating a Comprehensive Rate Limiting Strategy

Crafting a rate-limiting strategy entails several considerations, including the anticipated usage patterns of the API, desired response times, and user expectations. Various endpoints may necessitate distinct rate limits based on their sensitivity and workload. For instance, a login endpoint should enforce a more stringent rate limit than a general search endpoint due to its vulnerability to brute-force attacks. Additionally, API rate limiting strategies ought to account for error management to convey appropriate responses to clients when limits are exceeded, such as HTTP status code 429 Too Many Requests for clear feedback.
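The per-endpoint limits and the 429 response described above can be sketched with a fixed-window counter. The endpoint paths, limits, and return shape (a status code plus headers) are hypothetical; the point is that a stricter limit applies to the login endpoint and that a rejected client is told when to retry.

```python
import math

# Hypothetical per-endpoint limits: (max requests, window length in seconds).
LIMITS = {"/login": (5, 60), "/search": (100, 60)}
DEFAULT_LIMIT = (60, 60)


class FixedWindowLimiter:
    """Count requests per (client, endpoint) within fixed time windows."""

    def __init__(self) -> None:
        self._windows = {}  # (client_id, endpoint) -> (window_start, count)

    def check(self, client_id: str, endpoint: str, now: float):
        """Return (status_code, headers): 200 if allowed, 429 with Retry-After if not."""
        limit, window = LIMITS.get(endpoint, DEFAULT_LIMIT)
        key = (client_id, endpoint)
        start, count = self._windows.get(key, (now, 0))
        if now - start >= window:
            start, count = now, 0  # the previous window has expired
        if count >= limit:
            retry_after = math.ceil(start + window - now)
            return 429, {"Retry-After": str(retry_after)}
        self._windows[key] = (start, count + 1)
        return 200, {}
```

Returning `Retry-After` alongside 429 Too Many Requests lets well-behaved clients back off for the right amount of time instead of retrying immediately.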

5. Adopt Regular Security Testing and Vulnerability Scanning

Conventional “quarterly” security tests are inadequate in a milieu where adversaries become increasingly advanced and security standards change swiftly. Implementing continuous security testing empowers organizations to detect and address vulnerabilities immediately upon discovery, thereby reducing the risk exposure period. As per OWASP, regular and thorough security evaluations can prevent over 90% of prevalent vulnerabilities, including injection issues, flawed authentication mechanisms, and data leaks, which are often targeted in API-centric applications.

i. Automated Vulnerability Scanning

Automated vulnerability scanning solutions are integral to ongoing testing initiatives, equipping organizations with a forward-looking methodology for vulnerability identification. These tools examine APIs for recognized vulnerabilities, configuration errors, and potential security weaknesses. Platforms such as Nessus, OpenVAS, and Qualys perform continuous scans on APIs, uncovering problems ranging from outdated software versions to unsecured data pathways.

A major benefit of automated scanning lies in its capacity to execute rapid, extensive scans with minimal human involvement. For instance, APIs that are in development or testing phases can undergo automatic scans with every deployment, ensuring that vulnerabilities are detected in their infancy. Nevertheless, automated scanning has its constraints. It can only identify known vulnerabilities derived from established databases, implying that it may not recognize novel or unidentified exploit patterns.

ii. Manual Penetration Testing: Going Beyond Automation

While automated vulnerability scanners establish a foundational level of security, manual penetration testing elevates the process by mimicking actual attack scenarios. Penetration testers delve deeper, striving to exploit intricate attack vectors that automated evaluations may overlook, such as business logic errors or multi-step exploits. Skilled penetration testers adopt an adversarial perspective, seeking inventive methods to circumvent defenses and reveal any latent vulnerabilities that automated tools might neglect.

For APIs, penetration testing frequently encompasses situations that extend beyond conventional security protocols. Testers may evaluate how the API manages boundary cases in user inputs, probing for improper validation or unforeseen behaviors that could facilitate injection attacks. Thorough testing scenarios also encompass credential stuffing, token manipulation, and assessments of multi-tenant architectures, which are especially pertinent for SaaS offerings. By merging automated scanning with focused manual testing, organizations establish a multi-layered defense that is robust against a diverse array of attack vectors.

iii. Integrating Security Testing into CI/CD Pipelines

As organizations increasingly adopt DevOps practices, embedding security testing within CI/CD pipelines has become imperative. Conducting security assessments at every phase of deployment aids in identifying vulnerabilities during development, thereby reducing expensive post-deployment corrections. Tools such as OWASP ZAP, Burp Suite, and SonarQube can be seamlessly integrated into CI/CD workflows, executing tests automatically with each code commit or deployment. This strategy ensures that security is woven into the development lifecycle rather than treated as an afterthought, leading to more secure code and reduced delays.

6. Monitor and Log API Activity

Even the most fortified APIs can be vulnerable to breaches without continuous monitoring. Logging and monitoring provide insight into real-time API interactions, enabling organizations to identify irregular patterns and act on suspicious activity before it escalates. Research from Snyk indicates that early identification can decrease breach response expenses by close to 40%, underscoring the importance of proactive monitoring. Ongoing monitoring also helps organizations comply with regulations such as GDPR, HIPAA, and PCI-DSS, which frequently require comprehensive logging of user interactions and data access.

i. What to Log: Essential Indicators of Anomalous Activity

Monitoring API activity entails documenting a range of actions, yet specific indicators deserve extra focus due to their correlation with prevalent attack methodologies:

- Failed Login Attempts: Multiple failed login attempts may indicate credential-stuffing attacks or brute-force efforts to compromise user accounts.

- Unusual API Requests: Analyzing the frequency, source, and types of API requests facilitates the rapid detection of anomalies. For example, an abrupt increase in requests from a single IP could suggest a DoS attack.

- Sensitive Data Access: Monitoring access trends to sensitive information can expose insider threats or unauthorized access. Keeping track of when, how, and who accesses sensitive endpoints is vital for recognizing potential data breaches.

- Rate Limit Breaches: Rate limits are designed to prevent misuse, but persistent breaches can signal bot attacks or attempts to overwhelm the system. Logging rate limit violations can unveil these trends at an early stage.
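The indicators above are easier to search and correlate when they are logged as structured records rather than free text. The sketch below emits one JSON line per security event using Python's standard `logging` and `json` modules; the logger name, event names, and field names are assumptions for illustration.

```python
import json
import logging
from datetime import datetime, timezone

logger = logging.getLogger("api.security")  # hypothetical logger name


def security_event(event_type: str, client_ip: str, endpoint: str, **details) -> str:
    """Log one security-relevant event as a JSON line and return that line."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event": event_type,
        "client_ip": client_ip,
        "endpoint": endpoint,
        **details,  # extra context; never pass passwords or tokens here
    }
    line = json.dumps(record, sort_keys=True)
    logger.warning(line)
    return line
```

A failed login might then be recorded with `security_event("failed_login", "203.0.113.7", "/login", username="alice")`, and a rate limit breach with a different event name and the same core fields.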

ii. Utilizing an API Gateway for Enhanced Monitoring

API gateways are crucial in managing and securing APIs, providing a unified approach to implementing monitoring and logging. Platforms such as Amazon API Gateway, Apigee, and Kong enable organizations to establish detailed monitoring, enforce rate limits, log access, and filter traffic according to predefined security policies. By centralizing these controls, API gateways deliver a comprehensive overview of API usage, allowing IT teams to respond swiftly to threats.

API gateways also facilitate alerting and automated responses, which can further streamline monitoring. For example, if an API gateway identifies an unusual spike in request frequency or a repeated access attempt to a sensitive endpoint, it can notify security teams or even execute pre-configured actions, such as temporarily blocking the offending IP address. This immediate responsiveness aids in ensuring continuous protection and mitigates damage before escalation occurs.
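The kind of automated response described here, blocking an IP address temporarily after repeated suspicious attempts, can be sketched as a small sliding-window monitor. Gateways implement this internally; the class below is only an illustration, and its name, thresholds, and timings are assumptions.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Temporarily block an IP after too many failed logins within a time window."""

    def __init__(self, threshold: int = 5, window_seconds: float = 300,
                 block_seconds: float = 900) -> None:
        self.threshold = threshold
        self.window_seconds = window_seconds
        self.block_seconds = block_seconds
        self._failures = defaultdict(deque)  # ip -> timestamps of recent failures
        self._blocked_until = {}             # ip -> time at which the block expires

    def record_failure(self, ip: str, now: float) -> bool:
        """Record a failed attempt; return True if this attempt triggered a block."""
        attempts = self._failures[ip]
        attempts.append(now)
        while attempts and now - attempts[0] > self.window_seconds:
            attempts.popleft()  # forget failures that fell outside the window
        if len(attempts) >= self.threshold:
            self._blocked_until[ip] = now + self.block_seconds
            attempts.clear()
            return True
        return False

    def is_blocked(self, ip: str, now: float) -> bool:
        """True while the IP's temporary block has not yet expired."""
        return now < self._blocked_until.get(ip, 0.0)
```

The point at which `record_failure` returns True is also a natural place to notify the security team, so that the automated block and the human alert stay in step.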

iii. Integrating SIEM for Advanced API Logging

For large environments, incorporating a Security Information and Event Management (SIEM) system can strengthen logging and threat detection. SIEM systems, such as Splunk, IBM QRadar, and Elastic SIEM, consolidate API logs from diverse sources and apply sophisticated analytics to detect patterns indicative of threats. By correlating API logs with data from other sources (including network and application logs), SIEM systems can identify complex, multi-stage attacks that might be overlooked if API logs were analyzed in isolation.

Sophisticated SIEM implementations can leverage machine learning to detect subtle anomalies in API usage, such as access patterns that deviate from usual behavior. This predictive capability fosters proactive threat identification and enhances overall security posture.

7. Web Application Firewall (WAF): Adding a Layer of Protection

While an API gateway oversees API-centric traffic, a Web Application Firewall (WAF) safeguards against web application vulnerabilities by analyzing and filtering HTTP requests. WAFs are particularly adept at mitigating prevalent threats such as SQL injection, cross-site scripting (XSS), and cookie tampering. A 2023 Akamai report indicates that deploying a WAF can decrease web application vulnerabilities by as much as 70%, establishing it as an essential component in the API security framework.

Notable WAFs like AWS WAF, Cloudflare WAF, and Imperva WAF provide functionalities that can be customized for API protection:

- Deep Packet Inspection (DPI): By examining packet data beyond just headers, WAFs can identify malicious payloads concealed within requests. DPI is particularly critical for blocking sophisticated injection attacks or malformed requests aimed at exploiting system weaknesses.

- Bot Mitigation: A growing number of WAFs now integrate bot management solutions that assist in differentiating between authentic users and automated bots, which frequently serve as the origin of credential-stuffing and brute-force assaults.

- Custom Rules and IP Reputation Analysis: WAFs empower organizations to establish custom rules based on traffic behavior, IP reputation, and geographical location. These rules enhance control over who can access the APIs and in what manner, automatically blocking traffic from suspicious sources.
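Custom rules of this kind are usually written in the WAF vendor's own rule language, but the evaluation logic can be illustrated in a few lines. The sketch below checks a request against an ordered list of block rules covering IP reputation, geography, and a simple bot signal. The networks, country code, rule names, and `Request` fields are all hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Request:
    ip: str
    country: str
    path: str
    user_agent: str


# Hypothetical reputation data; a real WAF would pull these from managed feeds.
BLOCKED_NETWORKS = [ipaddress.ip_network("198.51.100.0/24")]
BLOCKED_COUNTRIES = {"ZZ"}


def _from_blocked_network(req: Request) -> bool:
    address = ipaddress.ip_address(req.ip)
    return any(address in network for network in BLOCKED_NETWORKS)


# Rules are evaluated in order; the first match decides.
RULES = [
    ("ip-reputation", _from_blocked_network),
    ("geo-block", lambda req: req.country in BLOCKED_COUNTRIES),
    ("empty-user-agent", lambda req: not req.user_agent.strip()),
]


def evaluate(req: Request) -> Optional[str]:
    """Return the name of the first matching block rule, or None to allow the request."""
    for name, matches in RULES:
        if matches(req):
            return name
    return None
```

Returning the rule name rather than a bare boolean makes each block decision easy to log and audit.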

8. Follow The Principle Of Least Privilege (PoLP)

The Principle of Least Privilege (PoLP) emphasizes the necessity of providing users and applications with only the essential permissions required to accomplish their functions, thereby reducing the likelihood of unauthorized access or exploitation. Although this concept may appear fundamental, neglecting to implement PoLP has been associated with a notable number of security breaches. Indeed, IBM Security discovered that insider threats, frequently stemming from excessive permissions, constitute approximately 20% of all security incidents.

Accounts with excessive permissions can result in unintended data exposure, privilege escalation, and even deliberate misuse by malicious insiders. Compliance with PoLP is particularly vital in the context of APIs, where exposed endpoints and detailed permissions imply that a single over-privileged user could inadvertently or maliciously access or alter sensitive information.

i. Implementing PoLP in API Security: Optimal Procedures

- Role-Based Access Control (RBAC): RBAC allocates permissions based on predefined roles rather than individual identities. Within an API framework, developers, users, and applications can be assigned distinct roles that delineate their access privileges, mitigating the risk of undue permissions. Roles such as 'viewer', 'editor', and 'admin' can be personalized to reflect distinct API functions, and any adjustments to user roles can be supervised from a consolidated platform.

- Attribute-Based Access Control (ABAC): Advancing beyond RBAC, ABAC assesses attributes like user location, time of access, and device type to make adaptive access determinations. This approach allows for a more granular level of control, particularly advantageous for applications with contextual restrictions. For instance, an ABAC policy may limit access to particular API endpoints based on the user’s department, IP address range, or time of day.

- Access Expiration Policies: Temporary permissions should be allocated whenever feasible. Issuing access tokens that expire after a set period (for example, JWTs with an expiry claim) prevents privileges from being misused long after they are no longer needed. This is particularly important in testing environments, where developers may require temporary elevated access for diagnostic purposes.

- Regular Permission Audits: Performing regular audits helps identify and eliminate outdated or superfluous permissions. Automated auditing tools can analyze role definitions and usage patterns and pinpoint accounts with excessive privileges. Combined with logging and monitoring systems, this makes it possible to spot deviations from standard access behavior and investigate further if warranted.

- Zero-Trust Policies for APIs: Implementing zero-trust principles necessitates the verification of each request prior to granting access. A zero-trust API gateway, for instance, authenticates user identity, role, and context for each request, ensuring that only sanctioned actions are executed. Zero-trust APIs also depend on session revalidation to prevent session hijacking, rendering them a robust solution for secure environments.
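The access expiration policy above can be illustrated with a minimal HS256-signed token in JWT format, built from the standard library only. The function names and claim choices are assumptions; production systems should use a maintained JWT library and managed keys rather than this sketch.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(secret: bytes, signing_input: str) -> str:
    return _b64url(hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest())


def issue_token(secret: bytes, subject: str, role: str, ttl_seconds: int,
                now: Optional[float] = None) -> str:
    """Create a signed token whose `exp` claim is `ttl_seconds` after issue time."""
    issued = int(time.time() if now is None else now)
    header = _b64url(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    claims = {"sub": subject, "role": role, "iat": issued, "exp": issued + ttl_seconds}
    payload = _b64url(json.dumps(claims).encode())
    return f"{header}.{payload}.{_sign(secret, header + '.' + payload)}"


def verify_token(secret: bytes, token: str, now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if the signature is valid and the token is unexpired, else None."""
    try:
        header, payload, signature = token.split(".")
        # Always verify as HS256; the header's "alg" field is deliberately not trusted.
        expected = _sign(secret, header + "." + payload)
        if not hmac.compare_digest(expected.encode("ascii"), signature.encode("ascii")):
            return None
        claims = json.loads(_b64url_decode(payload))
    except ValueError:  # malformed token, bad base64, bad JSON, or non-ASCII input
        return None
    current = time.time() if now is None else now
    return claims if current < claims.get("exp", 0) else None
```

Checking the token on every request, instead of trusting a long-lived session, fits the zero-trust point as well: elevated access granted for a diagnostic task lapses on its own once the short `ttl_seconds` has passed.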

Get Started With Bluella Today

Isn’t it time to take the worry out of API security? Contact Bluella to learn more about how our solutions can seamlessly integrate with your existing architecture, strengthen your API defenses, and give you peace of mind in an increasingly complex security landscape. Let’s work together to turn API security into one of your greatest assets.